Beyond the Opportunity Landscape: Seeing What Others Miss

Why most organizations misread opportunity, chase the wrong signals, and stay blind to the progress customers really want.

Tony Ulwick’s Outcome-Driven Innovation (ODI) framework is one of the most precise tools for prioritizing customer needs. His formula:

Opportunity Score = Outcome Importance + max(Outcome Importance – Outcome Satisfaction, 0)

is simple, intuitive, and analytically sound. It identifies gaps where customers are underserved and opportunities for innovation exist.

Yet, anyone working on strategic innovation today quickly realizes: this formula is just the beginning. It measures gaps, but not their significance. It tells you where it hurts, but not where innovation delivers real strategic impact.

In this article, I’ll explain why Ulwick’s formula is strong but limited. I’ll show the dimensions it misses and how you can extend it into a Next-Gen formula that incorporates context, emotion, and strategic fit—and translates directly into actionable problem prompts for the Solution Zone.

The Genius of Tony Ulwick

The ODI formula is based on two variables:

Importance: How critical is the desired outcome for the customer?

Satisfaction: How well is this outcome currently met?

The difference represents the unmet need, the foundation for innovation. The max(..., 0) term clamps that difference at zero, so an over-served outcome earns no credit for a gap and scores only its importance. Logical and elegant.
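To make the arithmetic concrete, here is a minimal sketch of the classic formula. The function name and the 0 to 10 rating scale are my assumptions for illustration; the formula itself is the one quoted above.

```python
def opportunity_score(importance: float, satisfaction: float) -> float:
    """Classic opportunity score: importance plus the unmet gap, clamped at zero."""
    gap = max(importance - satisfaction, 0.0)  # over-served outcomes contribute no gap
    return importance + gap


# Underserved outcome (assumed ratings): the gap of 5 is added to the importance.
print(opportunity_score(9.0, 4.0))  # 14.0

# Over-served outcome (assumed ratings): the gap is clamped to 0,
# so the score equals the importance alone.
print(opportunity_score(6.0, 8.0))  # 6.0
```

Note that the second outcome is not dropped from the ranking; it simply cannot score higher than its importance.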

Why it works so well

Simplicity: Anyone can understand it.

Objectivity: Scores are based on customer perception, not company assumptions.

Quantifiability: Enables systematic ranking of outcomes across large datasets.

Segmentable: Scores can be analyzed per market segment to uncover differentiated opportunities.
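The segmentation point can be sketched in a few lines. Everything in this example is hypothetical: the segment names, the survey rows, and the choice to average ratings per segment before scoring (the article does not prescribe an aggregation method).

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey rows: (segment, importance rating, satisfaction rating), both on 0-10.
responses = [
    ("startups", 9, 3),
    ("startups", 8, 4),
    ("enterprise", 7, 8),
    ("enterprise", 6, 7),
]


def segment_scores(rows):
    """Average the ratings per segment, then apply importance + max(importance - satisfaction, 0)."""
    grouped = defaultdict(list)
    for segment, importance, satisfaction in rows:
        grouped[segment].append((importance, satisfaction))

    scores = {}
    for segment, ratings in grouped.items():
        imp = mean(r[0] for r in ratings)
        sat = mean(r[1] for r in ratings)
        scores[segment] = imp + max(imp - sat, 0)
    return scores


scores = segment_scores(responses)
print(scores)  # {'startups': 13.5, 'enterprise': 6.5}
```

With these made-up numbers, the same outcome is a strong opportunity for one segment and over-served in the other, which a single market-wide average would hide.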

In short, Ulwick forces us to see customers as goal-directed systems: people trying to make progress. This is a paradigm shift from feature-based innovation.

Limitations of the Classic Formula

Despite its strengths, the ODI formula has critical gaps that matter in practice.

Lack of Context Sensitivity

ODI assumes importance and satisfaction are static. In reality, both change with situation, experience, and usage context.

Example: A team initially sees “speed of onboarding” as irrelevant. After using the tool, it suddenly becomes critical. Classic ODI misses this dynamic.

Emotional and Social Jobs Are Ignored

Ulwick focuses on functional outcomes: “Reduce time,” “Increase accuracy.”

But real decisions are often driven by emotional or social progress: trust, confidence, status, belonging.

Example: Users want to feel confident choosing an AI solution. It’s not just a functional gap—it’s an emotional one, essential for adoption.

Strategic Fit Is Missing

ODI scores are company-agnostic. They say nothing about whether your company can realistically deliver the solution.

A highly relevant opportunity may be strategically impossible, expensive, or misaligned with your capabilities.

Example: An opportunity in AI ethics may matter greatly to users, but if your company lacks the expertise and brand credibility, the opportunity may not be actionable.

Market and Competitive Dynamics Are Ignored

Two outcomes with the same score may differ drastically in strategic opportunity, depending on the competitive landscape.

Example:

“Reduce time to compare AI tools” → Score 14, but the market is saturated with comparison platforms.

“Feel confident in choosing the right AI tool” → Score 14, but few competitors exist → true white space.

Frequency and Prevalence Are Not Considered

An outcome may be extremely important to a small group but irrelevant for the majority. ODI measures importance but not how widespread the need is.

No Meaning-Making or Narrative

ODI delivers numbers, not language. Teams need stories to communicate opportunities internally, align stakeholders, and define the problem in the Solution Zone.

No Link to Solutions or Experiments

ODI stops at measurement. It doesn’t connect to hypothesis formulation, opportunity framing, or testing, which are crucial in modern innovation workflows.

The Next-Gen Opportunity Formula

To address these gaps, we extend Ulwick’s formula with Context Fit, Emotional Relevance, and Strategic Fit:

Next-Gen Opportunity Score = (Importance + max(Importance – Satisfaction, 0)) × Context Fit × Emotional Relevance × Strategic Fit

Explanation of Each Dimension

How it works:

Multiplying the modifiers captures synergy: opportunities that score high across all dimensions stand out, while low fit in any dimension reduces priority.

To prevent inflated numbers, the final score might be additionally normalized, e.g., scaled to 0–100.

Alternatively, weighted addition can be used for executive-friendly interpretation, but it reduces the synergistic effect of high-alignment opportunities.
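The three options above (multiplication, normalization, weighted addition) can be compared in a short sketch. Several things here are assumptions of mine rather than part of the formula: ratings on a 0 to 10 scale, modifiers on a 0 to 1 scale, the max(..., 0) clamp carried over from the classic formula, the specific weights, and the particular way the additive variant is built. The modifier values for the two outcomes are invented for illustration.

```python
def base_score(importance: float, satisfaction: float) -> float:
    """Classic part: importance plus the unmet gap, clamped at zero."""
    return importance + max(importance - satisfaction, 0.0)


def next_gen_score(importance, satisfaction, context_fit, emotional_relevance, strategic_fit):
    """Multiplicative form: the base score scaled by the three modifiers (assumed 0-1)."""
    base = base_score(importance, satisfaction)
    return base * context_fit * emotional_relevance * strategic_fit


def normalize(raw: float, max_rating: float = 10.0) -> float:
    """Scale to 0-100. With modifiers capped at 1, the largest raw score is 2 * max_rating."""
    return 100.0 * raw / (2.0 * max_rating)


def weighted_additive(importance, satisfaction, modifiers, weights, max_rating=10.0):
    """One possible additive reading: weighted mean of the rescaled base score and the modifiers."""
    components = [base_score(importance, satisfaction) / (2.0 * max_rating), *modifiers]
    return 100.0 * sum(w * c for w, c in zip(weights, components)) / sum(weights)


# Hypothetical judgments for two outcomes that both score 14 in the classic formula.
compare = dict(importance=9.0, satisfaction=4.0,
               context_fit=0.8, emotional_relevance=0.5, strategic_fit=0.5)
confident = dict(importance=9.0, satisfaction=4.0,
                 context_fit=0.9, emotional_relevance=0.9, strategic_fit=0.8)

for name, o in [("compare", compare), ("confident", confident)]:
    multiplicative = normalize(next_gen_score(**o))
    additive = weighted_additive(
        o["importance"], o["satisfaction"],
        (o["context_fit"], o["emotional_relevance"], o["strategic_fit"]),
        weights=(0.4, 0.2, 0.2, 0.2),
    )
    print(name, round(multiplicative, 1), round(additive, 1))
# compare 14.0 64.0
# confident 45.4 80.0
```

Under these assumptions the multiplicative scores differ by more than a factor of three, while the additive scores land much closer together. That is the trade-off in practice: addition is easier to explain, but it flattens the difference between well-aligned and poorly aligned opportunities.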

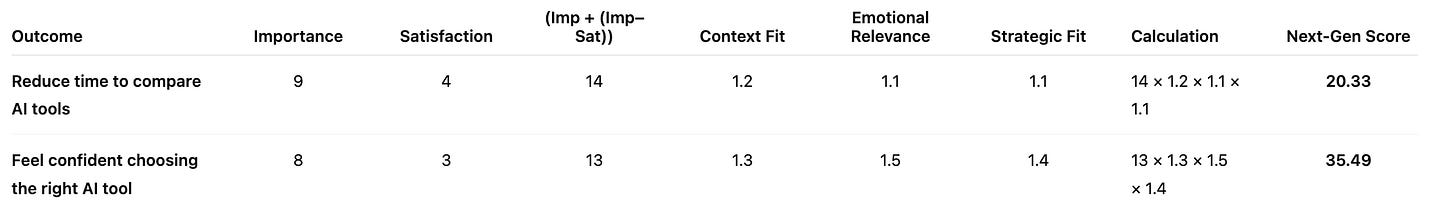

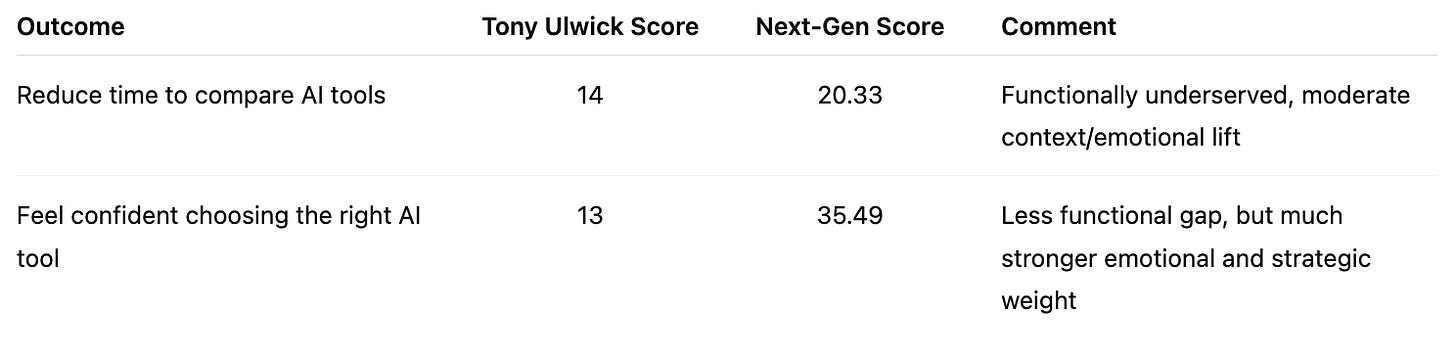

Example Calculation

Interpretation

Both outcomes are underserved. But the Next-Gen Score immediately reveals which opportunity carries strategic weight.

From Score to Problem Prompt

The crucial step Tony never makes is translating the opportunity into a problem definition for the Solution Zone.

Opportunity Hypothesis Template:

People trying to [Job Step]

struggle to [Pain/Barrier]

because [Underlying Reason]

and wish they could [Desired Outcome/Progress]

Problem Prompt for Ideation:

How might we enable [User/Persona] to [achieve progress] without [pain/barrier]?

Example:

How might we enable teams to compare AI tools confidently without getting lost in marketing noise?

This creates a semantic anchor, guiding idea generation, testing, and solution development.
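If you keep opportunities in a spreadsheet or a small script, the two templates can be filled mechanically. The sketch below is one way to do that; the class and field names are hypothetical, and the barrier and reason texts are invented to complete the example. Only the prompt wording and the desired outcome come from the article.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    persona: str
    job_step: str
    barrier: str
    reason: str
    desired_outcome: str
    progress: str
    pain: str

    def hypothesis(self) -> str:
        """Fill the opportunity hypothesis template."""
        return (
            f"People trying to {self.job_step} struggle to {self.barrier} "
            f"because {self.reason} and wish they could {self.desired_outcome}."
        )

    def problem_prompt(self) -> str:
        """Fill the 'How might we' template."""
        return f"How might we enable {self.persona} to {self.progress} without {self.pain}?"


opp = Opportunity(
    persona="teams",
    job_step="choose an AI tool for their work",                # illustrative
    barrier="tell real capabilities from vendor claims",        # illustrative
    reason="most tools are described in near-identical terms",  # illustrative
    desired_outcome="feel confident in choosing the right AI tool",
    progress="compare AI tools confidently",
    pain="getting lost in marketing noise",
)

print(opp.hypothesis())
print(opp.problem_prompt())
```

Keeping the hypothesis and the prompt on the same record makes it harder for the ideation question to drift away from the research that justified it.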

Why This Matters

Using only Importance/Satisfaction is a partial perspective: it identifies gaps but not impact.

Integrating context, emotion, and strategy reveals where meaningful progress happens—and for whom.

The Next-Gen formula produces quantitative yet meaning-driven guidance. It connects research thinking with creative decision-making.

Practical Application

Outcome Mapping: Cluster related outcomes.

Candidate Selection: Score outcomes using the Next-Gen formula.

Opportunity Hypothesis: Translate high-priority scores into narrative hypotheses.

Problem Prompt: Formulate guiding questions for the Solution Zone.

Ideation & Testing: Use prompts as the anchor for experiments.

This creates a continuous flow from insight to solution, which classic ODI alone does not provide.

Reflection and Critique of the Next-Gen Tool

Even the Next-Gen formula is not perfect. While it extends Tony Ulwick’s approach by adding context, emotion, and strategic relevance, several caveats remain:

Subjectivity: Context Fit, Emotional Relevance, and Strategic Fit rely on team judgments. Different perspectives can lead to variability.

Complexity: Multiplying multiple dimensions increases the formula’s complexity compared to Tony’s original version and can skew scores, especially with outliers.

Validation: Quantitative scores without user or market validation remain assumptions. Observations, experiments, and testing are essential.

Focus on existing jobs: The method emphasizes known or explored outcomes; radically new, unarticulated jobs may be overlooked.
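The subjectivity and complexity caveats are easy to see with numbers. In this sketch, three hypothetical team members rate the same outcome (base score 14), and the modifier values are invented. Because the modifiers are multiplied, moderate disagreement on a single dimension moves the final score considerably.

```python
def next_gen(base, context_fit, emotional_relevance, strategic_fit):
    """Multiplicative Next-Gen score for a given base score and three modifiers (assumed 0-1)."""
    return base * context_fit * emotional_relevance * strategic_fit


# Hypothetical judgments: (context fit, emotional relevance, strategic fit) per rater.
ratings = [
    (0.9, 0.9, 0.8),
    (0.7, 0.8, 0.6),
    (0.9, 0.5, 0.8),  # disagrees with the first rater on emotional relevance only
]

scores = [next_gen(14.0, *r) for r in ratings]
spread = max(scores) - min(scores)

print([round(s, 2) for s in scores])
print(round(spread, 1))  # about 4.4 points on a base of 14
```

A spread this large relative to the base score is a signal to discuss the judgments, or to collect evidence, before trusting the ranking.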

Conclusion: The Next-Gen tool delivers decision-relevant prioritization, but does not replace iterative testing and learning. It is a thinking and decision framework, not a substitute for real-world solution experience.

Invitation for Further Exploration

Innovation thrives on exploration. The Next-Gen formula opens new perspectives, but it must be validated and refined:

How do different weightings affect prioritization?

Which outcomes remain hidden due to subjective scoring?

How can context and emotion be quantified more objectively?

I invite anyone interested in exploring, experimenting, or testing these questions to join me. Together, we can build a robust, practical framework for strategic opportunity evaluation.

Support in Opportunity Prioritization

If you want to:

Identify which opportunities in your organization are truly strategic,

Start innovation projects more efficiently and with clear focus,

Guide teams in translating insights into actionable problem prompts,

I provide workshops, coaching, and hands-on support.

Thanks for this, Yetvart. It aligns very closely with the approach I teach.

I use the job story format to express the opportunity hypothesis in a way that it includes the job step, the context, the emotions and the desired outcomes all in one statement. Each element is directly informed by your research.

And yes, there needs to be a prompt for ideation. I also use the "How might we...?" format and generate it directly from the job story.

Nice work!